Conic Programming

Contents

run (fullfile ('..', 'install_vsdp.m'))

Conic Programming¶

Primal and dual form¶

VSDP can handle three self dual convex cones, that often occur in practical applications. These are:

The non-negative orthant:

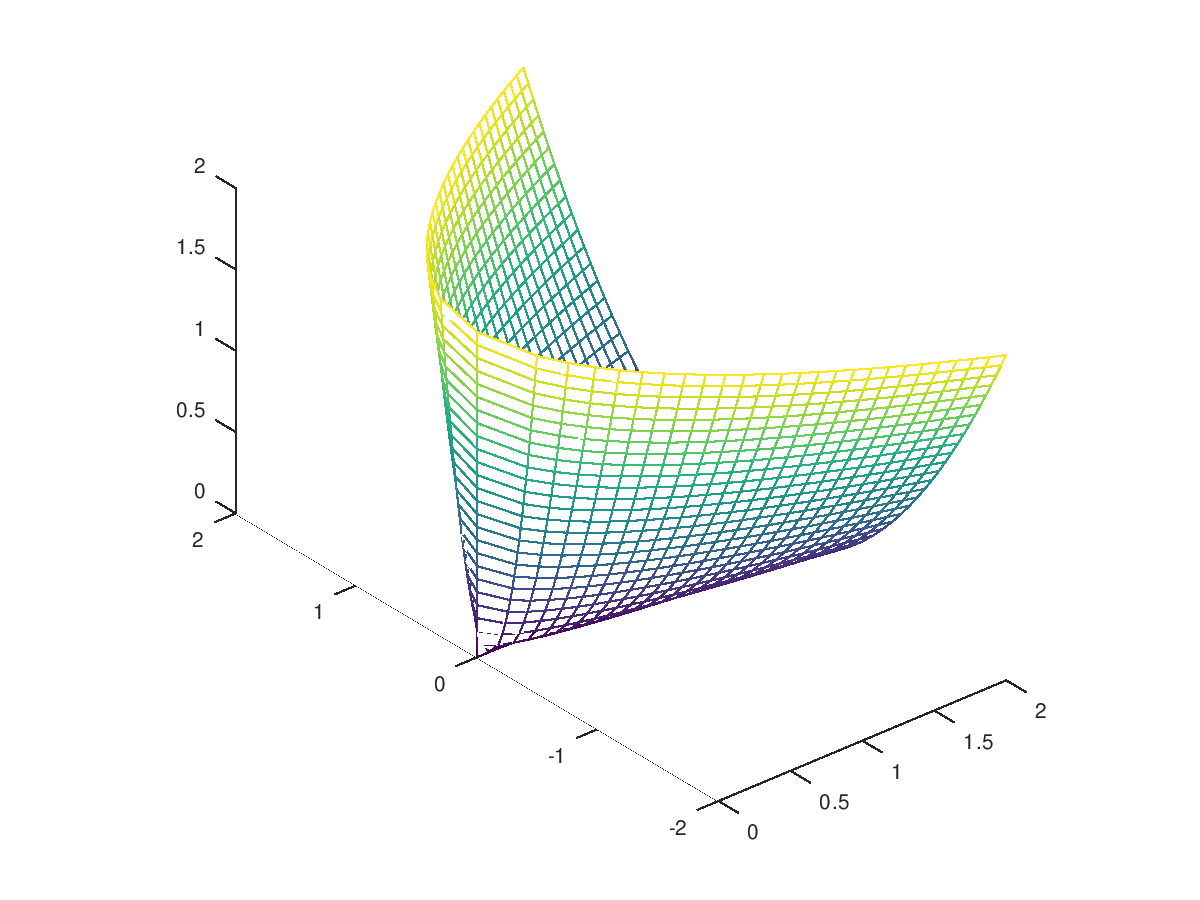

The Lorentz cone (see [1]):

The cone of symmetric positive semidefinite matrices:

If a quantity is in the interior of one of the above cones, the definitions above must hold with strict inequalities.

By \(\langle c, x \rangle := c^{T} x\) the usual Euclidean inner product of vectors in \(\mathbb{R}^{n}\) is denoted. For symmetric matrices \(X, Y \in \mathbb{S}^{n}\) the inner product is given by \(\langle X,Y \rangle := \text{trace}(XY)\).

Let \(A^{f}\) and \(A^{l}\) be a \(m \times n_{f}\) and a \(m \times n_{l}\) matrix, respectively, and let \(A_{i}^{q}\) be \(m \times q_{i}\) matrices for \(i = 1,\ldots,n_{q}\). Let \(c^{f} \in \mathbb{R}^{n_{f}}\), \(c^{l} \in \mathbb{R}^{n_{l}}\), \(c_{i}^{q} \in \mathbb{R}^{q_i}\), and \(b \in \mathbb{R}^{m}\). Moreover, let \(A_{1,j}^{s}, \ldots, A_{m,j}^{s}\), \(C_{j}^{s}\), \(X_{j}^{s}\) be symmetric \(s_{j} \times s_{j}\) matrices for \(j = 1, \ldots, n_{s}\).

Now we can define the conic semidefinite-quadratic-linear programming problem in primal standard form

where \(x^{f}\) are “free variables”, \(x^{l}\) are “non-negative variables”, \(x_{i}^{q}\) are “second-order cone (SOCP) variables”, and finally \(X_{j}^{s}\) are “positive semidefinite (SDP) variables”. The linear operator

maps the symmetric matrices \(X_{j}^{s}\) to \(\mathbb{R}^{m}\). The adjoint linear operator is

The dual problem associated with the primal standard form is

where \(z^{f} \in \lbrace 0 \rbrace^{n_{f}}\), \(z^{l} \in \mathbb{R}^{n_{l}}_{+}\), \(z_{i}^{q} \in \mathbb{L}^{q_i}\), \(i = 1, \ldots, n_{q}\), and \(Z_{j}^{s} \in \mathbb{S}^{s_{j}}_{+}\), \(j = 1, \ldots, n_{s}\).

The objective functions and equality constraints of the primal and dual problem are linear. Thus conic programming can be seen as an extension of linear programming with additional conic constraints.

By definition the vector \(x^{f}\) contains all unconstrained or free variables, whereas all other variables are bounded by conic constraints. In several applications some solvers (for example SDPA or CSDP) require that free variables are converted into the difference of non-negative variables. Besides the major disadvantage that this transformation is numerical unstable, it also increases the number of variables of the particular problems. In VSDP free variables can be handled in a numerical stable manner.

Condensed form¶

Occasionally, it is useful to represent the conic programming problem in a more compact form by using the symmetric vectorization operator. This operator maps a symmetric matrix \(X \in \mathbb{S}^{n}\) to an \(n(n + 1)/2\)-dimensional vector

where \(\alpha\) is a scaling factor for the off diagonal elements. The inverse operation is denoted by \(smat\) such that \(smat(svec(X, \alpha), 1/\alpha) = X\). For a vectorized quantity \(x\), scaled by \(\alpha\), we often write \(x \in \mathbb{S}^{n}\) as abbreviation for \(smat(x, 1/\alpha) \in \mathbb{S}^{n}\).

By using \(svec\) it is possible to map each symmetric matrix to a vector quantity. Additionally, by using the scaling factor \(\alpha = 1\) for all \(A_{kj}^{s}\), \(C_{j}^{s}\), and \(Z_{j}^{s}\) and the scaling factor \(\alpha = 2\) for all \(X_{j}^{s}\), the inner product of symmetric matrices reduces to a simple scalar product of two vectors. Thus all cones can be treated equally.

The condensed quantities \(c\), \(x\), and \(z\) are \(n \times 1\)-vectors:

where \(n = n_{f} + n_{l} + \sum_{i = 1}^{n_{q}} q_{i} + \sum_{j = 1}^{n_{s}} s_{j}(s_{j} + 1)/2\). \(A^{T}\) becomes an \(n \times m\) matrix

Let the constraint cone \(K\) and its dual cone \(K^{*}\) be

With these abbreviations we obtain the following block form of the conic problem:

with optimal value \(\hat{f}_{p}\) and the corresponding dual problem

with optimal value \(\hat{f}_{d}\).

In VSDP each conic problem is fully described by the four variables (A, b, c, K).

The first two quantities represent the affine constraints \(Ax = b\).

The third is the primal objective vector c,

and the last describes the underlying cone.

The cone K is a structure with four fields: K.f, K.l, K.q, and K.s.

The field K.f stores the number of free variables \(n_{f}\),

the field K.l stores the number of non-negative variables \(n_{l}\),

the field K.q stores the dimensions \(q_{1}, \ldots, q_{n_{q}}\) of the second order cones,

and similarly K.s stores the dimensions \(s_{1}, \ldots, s_{n_{s}}\) of the semidefinite cones.

If a component of K is empty,

then it is assumed that the corresponding cone does not occur.

It is well known that for linear programming problems strong duality \(\hat{f}_{p} = \hat{f}_{d}\) holds without any constraint qualifications. General conic programs satisfy only the weak duality condition \(\hat{f}_{d} \leq \hat{f}_{p}\). Strong duality requires additional constraint qualifications, such as Slater’s constraint qualifications (see [24] and [28]).

Strong Duality Theorem

If the primal problem is strictly feasible (i.e. there exists a primal feasible point \(x\) in the interior of \(K\)) and \(\hat{f}_{p}\) is finite, then \(\hat{f}_{p} = \hat{f}_{d}\) and the dual supremum is attained.

If the dual problem is strictly feasible (i.e. there exists some \(y\) such that \(z = c - (A)^{T} y\) is in the interior of \(K^{*}\)) and \(\hat{f}_{d}\) is finite, then \(\hat{f}_{d} = \hat{f}_{p}\), and the primal infimum is attained.

In general, the primal or dual problem formulation may have optimal solutions while its respective dual problem is infeasible, or the duality gap may be positive at optimality.

Duality theory is central to the study of optimization. Firstly, algorithms are frequently based on duality (like primal-dual interior-point methods), secondly, they enable one to check whether or not a given feasible point is optimal, and thirdly, it allows one to compute verified results efficiently.

Interval arithmetic¶

For the usage of VSDP a knowledge of interval arithmetic [23] is not required, but some error bound outputs are intervals. Therefore we give a very brief introduction to interval formats. An interval vector or an interval matrix is defined as a set of vectors or matrices that vary between a lower and an upper vector or matrix, respectively. In other words, these are quantities with interval components.

In INTLAB [22] these interval quantities

can be initialized with the infsup-function.

format infsup short

x = infsup (-1, 2)

intval x =

[ -1.0000, 2.0000]

Equivalently,

these quantities can be defined by a midpoint-radius representation,

using the midrad-function.

format midrad short

y = midrad (0.5, 1.5)

format infsup short

y

intval y =

< 0.5000, 1.5000>

intval y =

[ -1.0000, 2.0000]